2. K8s Pod Scheduling Policies

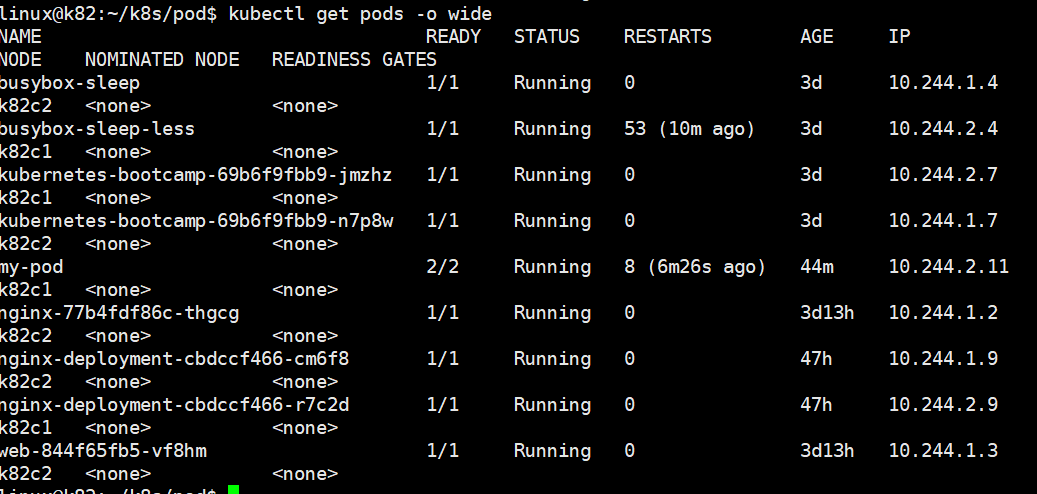

Use the following command to see the IP assigned to each pod, along with the node it runs on:

kubectl get pods -o wide

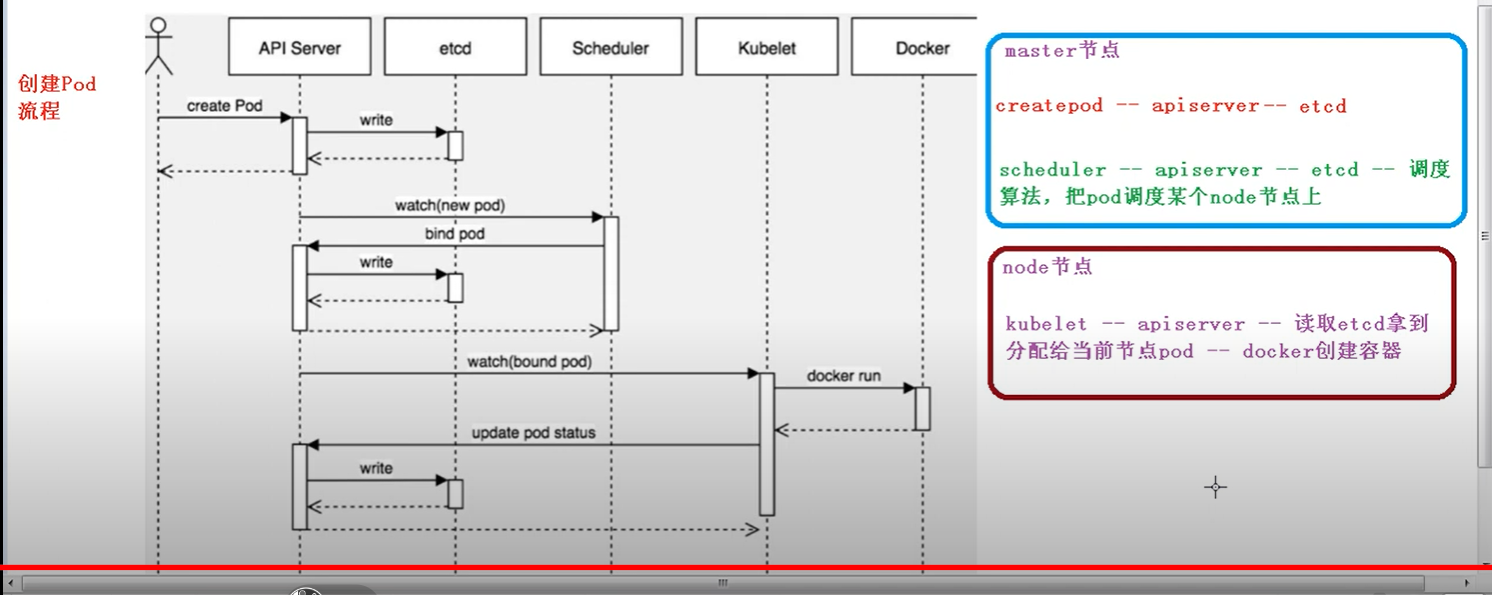

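Pod creation flow

- On the master node

  - A create-pod request is sent to the apiserver, which stores the pod information in etcd

  - The scheduler picks up the new pod from the apiserver and assigns it to a specific node

- On the worker node

  - The kubelet watches the apiserver (which is backed by etcd) for new pods assigned to its node

  - The kubelet calls Docker to create the containers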

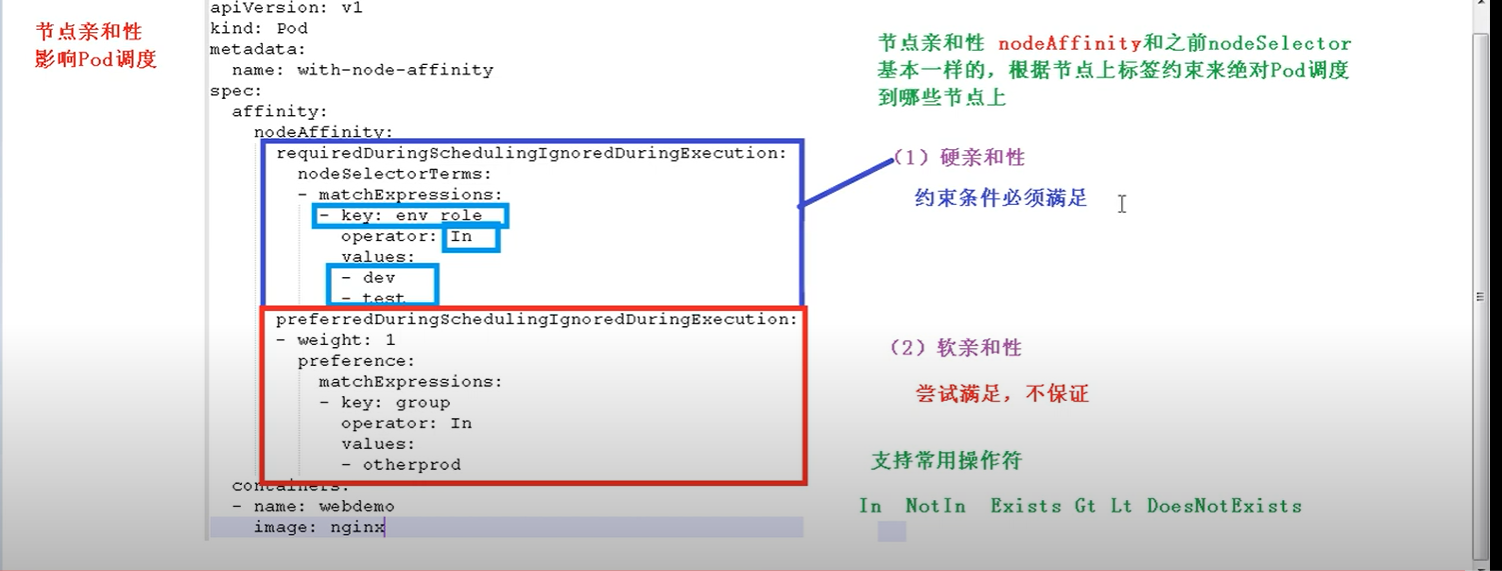

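Factors that affect scheduling

- Resource requests and limits: a pod is only placed on a node that has enough free CPU and memory for the pod's resource requests

- Node affinity

  - Hard affinity: requiredDuringSchedulingIgnoredDuringExecution (the rule must be satisfied, otherwise the pod is not scheduled)

  - Soft affinity: preferredDuringSchedulingIgnoredDuringExecution (the scheduler tries to satisfy the rule but does not guarantee it)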

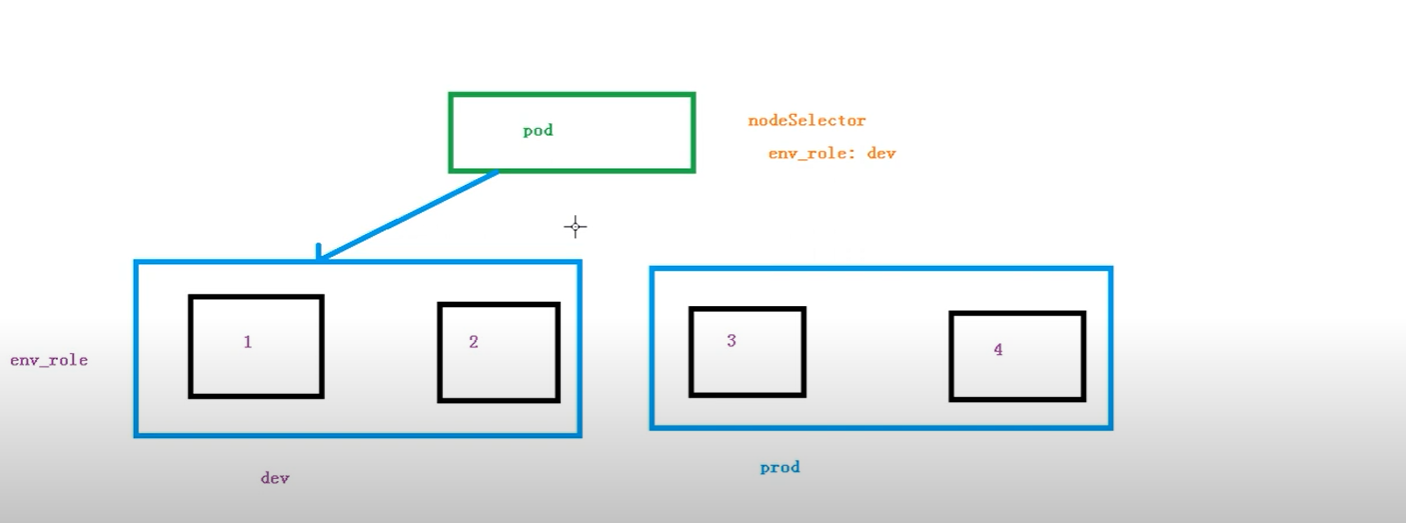
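A minimal sketch of how resource requests and both kinds of node affinity might appear in one pod manifest. The pod name, the `group` label, and the CPU and memory figures are assumptions chosen for illustration; only the `env_role` label comes from these notes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-demo            # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes labeled env_role=dev or env_role=test qualify
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: env_role
            operator: In
            values:
            - dev
            - test
      # Soft rule: among qualifying nodes, prefer those labeled group=other
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: group           # hypothetical label
            operator: In
            values:
            - other
  containers:
  - name: webdemo
    image: nginx
    resources:
      requests:                  # compared against the node's free capacity
        cpu: "250m"
        memory: "64Mi"
      limits:                    # runtime caps for the container
        cpu: "500m"
        memory: "128Mi"
```

If no node satisfies the hard rule, the pod stays Pending; if no node satisfies the soft rule, the pod is still scheduled onto some node that passes the hard rule.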

- Node label selector (nodeSelector): the pod is only scheduled onto nodes that carry the given label

      spec:
        nodeSelector:
          env_role: dev

How to implement the process above

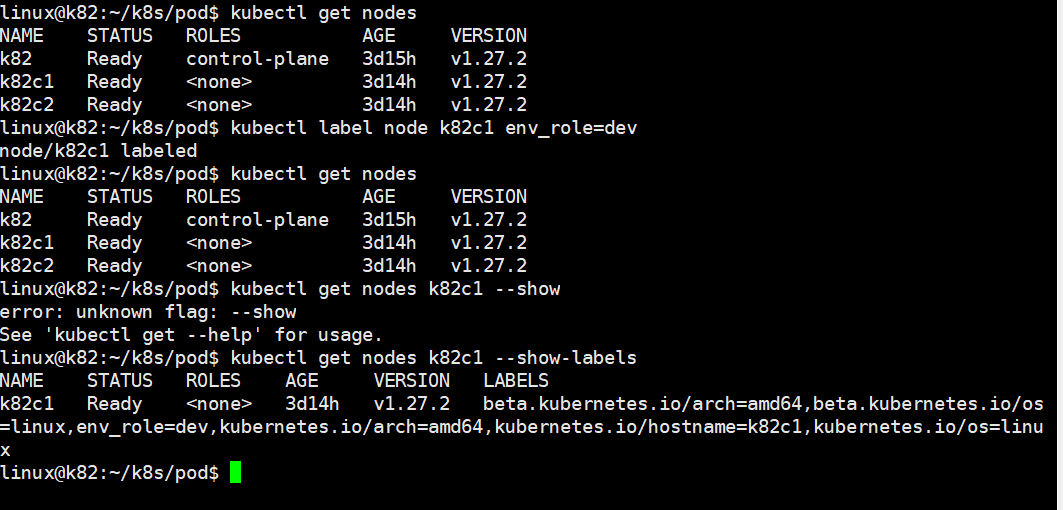

1. Add a label to node1

```kubectl label node node1 env_role=dev```

2. Use nodeSelector in the pod spec so that the pod only lands on nodes with that label
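A complete pod manifest for step 2 might look like the sketch below. The pod name is an assumption; the container name and image are borrowed from the toleration example later in these notes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodeselector-demo   # hypothetical name
spec:
  # Only nodes labeled env_role=dev (node1, after step 1) are candidates
  nodeSelector:
    env_role: dev
  containers:
  - name: webdemo
    image: nginx
```

After applying it, `kubectl get pods -o wide` should list the pod on node1, provided node1 has enough free resources and no taint that blocks it.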

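Factors that affect scheduling, part 2 (taints)

A taint is a property of a node. This differs from the two mechanisms above (node affinity and nodeSelector), which are properties of a pod.

Taint effects

- NoSchedule: new pods are not scheduled onto this node unless they tolerate the taint

- PreferNoSchedule: the scheduler tries to avoid this node but may still use it if nothing else fits

- NoExecute: new pods are not scheduled, and pods already running on the node that do not tolerate the taint are evicted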
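For reference, a taint is stored on the Node object itself. The excerpt below sketches roughly what `kubectl get node k8snode -o yaml` would show after a taint has been added; the key and value are the placeholder `key=value` pair used in the commands in these notes, not real data.

```yaml
# Excerpt of a Node object (most fields omitted)
apiVersion: v1
kind: Node
metadata:
  name: k8snode
spec:
  taints:
  - key: key              # placeholder key
    value: value          # placeholder value
    effect: NoSchedule    # one of NoSchedule, PreferNoSchedule, NoExecute
```

Each entry carries exactly one effect; a node can hold several taints by listing several entries.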

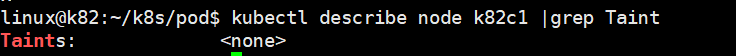

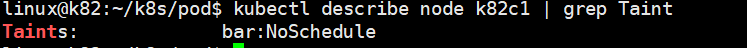

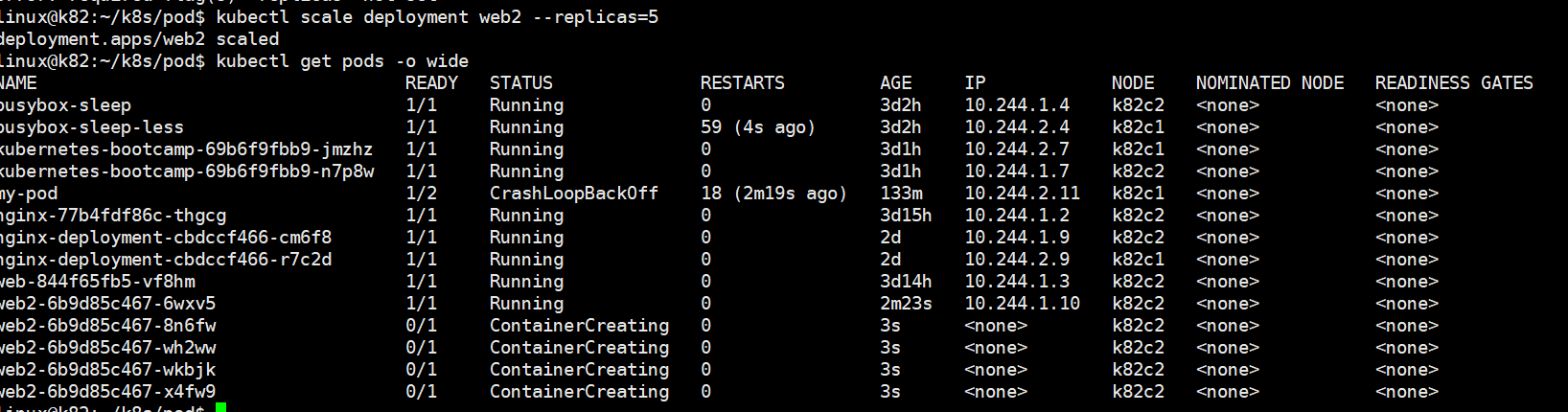

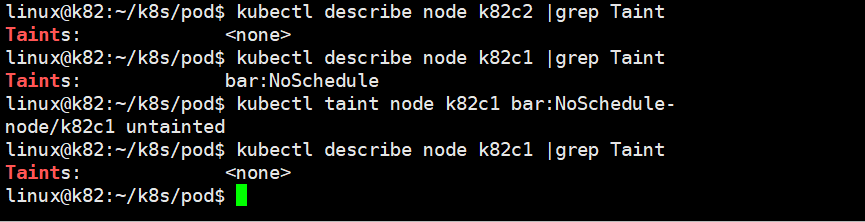

- View the taints on a node

kubectl describe node k8snode |grep Taint

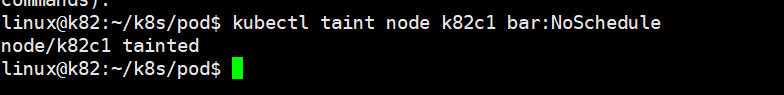

- Add a taint, where the effect is one of NoSchedule, PreferNoSchedule, or NoExecute

kubectl taint node k8snode key=value:<effect>

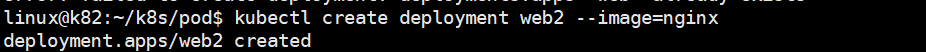

- Create a deployment and test it: all 5 replicas end up on node k82c2, the node without the taint

- Remove the taint (note the trailing `-`)

kubectl taint node k82c1 bar:NoSchedule-
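The test deployment used in the third step is not recorded in these notes. A minimal sketch that would reproduce the experiment, assuming a deployment named `web` running nginx with 5 replicas:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web               # hypothetical name
spec:
  replicas: 5
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      # No tolerations here, so nodes tainted NoSchedule are skipped
      containers:
      - name: webdemo
        image: nginx
```

With a NoSchedule taint on one node, `kubectl get pods -o wide` should show every replica on the other node. Once the taint is removed, newly created replicas can use both nodes again, while pods that are already running stay where they are.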

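Taint tolerations

Once a pod declares a toleration that matches a taint, it may be scheduled onto that node even if the taint effect is NoSchedule.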

    spec:
      tolerations:
      - key: "key"
        operator: "Equal"
        value: "value"
        effect: "NoSchedule"
      containers:
      - name: webdemo
        image: nginx
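The fragment above matches a taint only when key, value, and effect are all equal. As a variation, a toleration can use the `Exists` operator to match on the key alone, whatever its value. The sketch below wraps it in a full pod manifest; the pod name is an assumption.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo   # hypothetical name
spec:
  tolerations:
  - key: "key"
    operator: "Exists"    # matches any value of "key"; no value field is given
    effect: "NoSchedule"
  containers:
  - name: webdemo
    image: nginx
```

A toleration only permits scheduling onto the tainted node and does not force it. To pin a pod to that node, combine the toleration with nodeSelector or node affinity.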